
| September 07, 2021 | Volume 17 Issue 33 |

Designfax weekly eMagazine

New 3D motion-tracking system could one day replace LiDAR in autonomous cars

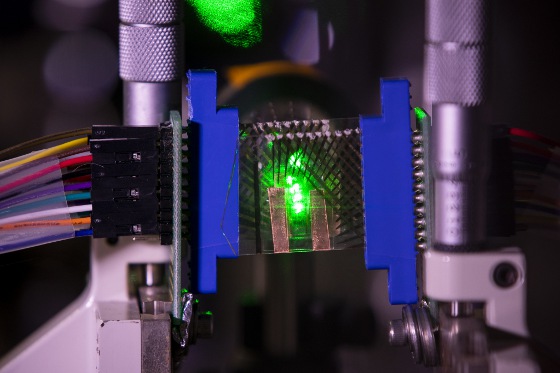

A graphene-based transparent photodetector array (acting as two layers of sensors in a camera) measures the focal stack images of a point object simulated by focusing a green laser beam onto a small spot in front of the lens inside a U-M lab. [Image credit: Robert Coelius/Michigan Engineering]

A new, real-time 3D motion-tracking system developed at the University of Michigan (U-M) combines transparent light detectors with advanced neural network methods to create a setup that could one day replace LiDAR and cameras in autonomous technologies.

While the technology is still in its infancy, future applications include automated manufacturing, biomedical imaging, and autonomous driving. A paper on the system has been published in Nature Communications.

The imaging system exploits the advantages of transparent, nanoscale, highly sensitive graphene photodetectors developed by Zhaohui Zhong, U-M associate professor of electrical and computer engineering, and his group. The detectors are the first of their kind.

"The in-depth combination of graphene nanodevices and machine learning algorithms can lead to fascinating opportunities in both science and technology," said Dehui Zhang, a doctoral student in electrical and computer engineering. "Our system combines computational power efficiency, fast tracking speed, compact hardware, and a lower cost compared with several other solutions."

The graphene photodetectors in this work have been tweaked to absorb only about 10% of the light they're exposed to, making them nearly transparent. Because graphene is so sensitive to light, this is sufficient to generate images that can be reconstructed through computational imaging. The photodetectors are stacked behind each other, resulting in a compact system, and each layer focuses on a different focal plane, which enables 3D imaging.
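The two ideas in this paragraph can be illustrated with some simple arithmetic. The sketch below is not the researchers' code. It assumes each layer absorbs exactly 10% of the light reaching it and ignores reflection and other losses, and it uses the ideal thin-lens equation with a hypothetical 50-mm lens and hypothetical sensor positions to show why sensor planes at different depths are in focus for objects at different distances.

```python
def absorbed_fractions(num_layers, absorption=0.10):
    """Fraction of the incoming light absorbed by each stacked layer.

    Assumption: every layer absorbs the same fraction of whatever light
    reaches it, and nothing is lost to reflection or scattering.
    """
    fractions = []
    remaining = 1.0  # fraction of the original light still travelling
    for _ in range(num_layers):
        fractions.append(remaining * absorption)
        remaining *= 1.0 - absorption
    return fractions


def in_focus_object_distance(focal_length_mm, sensor_distance_mm):
    """Solve the thin-lens equation 1/f = 1/d_o + 1/d_i for d_o.

    Returns the object distance that is sharply imaged onto a sensor
    plane sitting sensor_distance_mm behind an ideal thin lens.
    """
    if sensor_distance_mm <= focal_length_mm:
        raise ValueError("sensor must sit farther from the lens than f")
    return (focal_length_mm * sensor_distance_mm
            / (sensor_distance_mm - focal_length_mm))


# Light budget for a two-layer stack like the one in the demonstration.
print(absorbed_fractions(2))

# Hypothetical lens and sensor positions (not taken from the paper):
# each sensor plane is in focus for a different object depth.
for sensor_mm in (55.0, 60.0):
    print(sensor_mm, in_focus_object_distance(50.0, sensor_mm))
```

Under these assumptions, the second layer still receives 90% of the incoming light, which is why stacking nearly transparent detectors is workable, and the two sensor planes respond most sharply to objects at two different depths.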

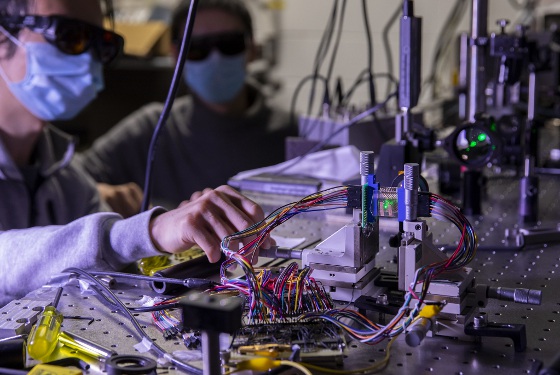

Zhen Xu, graduate student research assistant for Electrical Engineering and Computer Science (left), and Dehui Zhang, graduate student research assistant for Electrical & Computer Engineering, measuring focal stack images of a point object simulated by focusing a green laser beam onto a graphene-based transparent photodetector array. [Image credit: Robert Coelius/Michigan Engineering]

But 3D imaging is just the beginning. The team also tackled real-time motion tracking, which is critical to a wide array of autonomous robotic applications. To do this, they needed a way to determine the position and orientation of an object being tracked. Typical approaches involve LiDAR systems and light-field cameras, both of which suffer from significant limitations, the researchers say. Others use metamaterials or multiple cameras. Hardware alone was not enough to produce the desired results.

They also needed deep learning algorithms. Helping to bridge those two worlds was Zhen Xu, a doctoral student in electrical and computer engineering. He built the optical setup and worked with the team to enable a neural network to decipher the positional information.

The neural network is trained to search for specific objects in the entire scene and then to focus only on the object of interest -- for example, a pedestrian in traffic or an object moving into your lane on a highway. The technology works particularly well for stable systems, such as automated manufacturing, or projecting human body structures in 3D for the medical community.

"It takes time to train your neural network," said project leader Ted Norris, professor of electrical and computer engineering, "but once it's done, it's done. So when a camera sees a certain scene, it can give an answer in milliseconds."

Doctoral student Zhengyu Huang led the algorithm design for the neural network. The algorithms the team developed are unlike the traditional signal-processing algorithms used for long-standing imaging technologies such as X-ray and MRI. That's particularly exciting to team co-leader Jeffrey Fessler, professor of electrical and computer engineering, who specializes in medical imaging.

"In my 30 years at Michigan, this is the first project I've been involved in where the technology is in its infancy," Fessler said. "We're a long way from something you're going to buy at Best Buy, but that's OK. That's part of what makes this exciting."

The team demonstrated success in tracking a beam of light, as well as an actual ladybug, with a stack of two 4 × 4 (16-pixel) graphene photodetector arrays. They also proved that their technique is scalable. They believe it would take as few as 4,000 pixels for some practical applications and 400-pixel × 600-pixel arrays for many more.

While the technology could be used with other materials, graphene has the additional advantages that it doesn't require artificial illumination and is environmentally friendly. Building the manufacturing infrastructure necessary for mass production will be a challenge, but it may be worth it, the researchers believe.

"Graphene is now what silicon was in 1960," Norris said. "As we continue to develop this technology, it could motivate the kind of investment that would be needed for commercialization."

The paper is titled "Neural Network Based 3D Tracking with a Graphene Transparent Focal Stack Imaging System." The research is funded by the W.M. Keck Foundation and the National Science Foundation.

Source: University of Michigan

Published September 2021
